Last week, after a call with the engineers on my team, I wanted to send a message to two of them at the same time. Little did I know I’d find what I believe to be a nasty privacy exploit in Slack, one that made me ask “Why is this even a feature?”

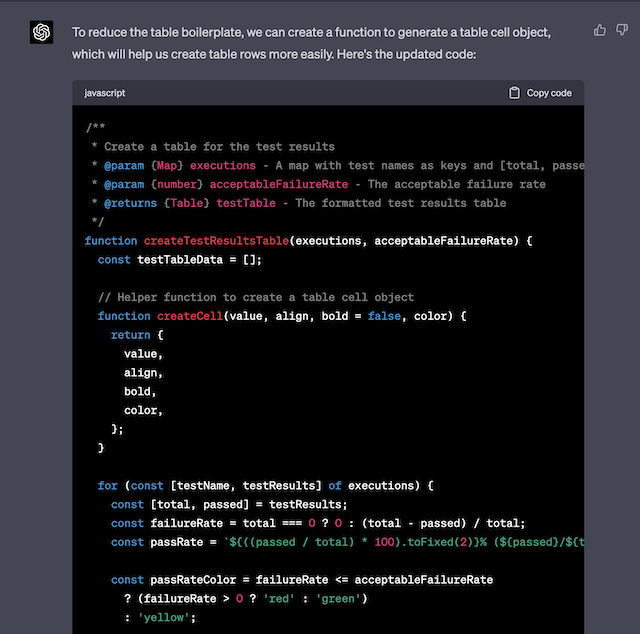

Like any responsible software engineer, instead of taking to social media or forums to post about the exploit, I opted to report it on HackerOne, where Slack accepts reports of potential security exploits, giving a detailed list of instructions on how to achieve it (Report #2171907).

Shortly after (within 40 minutes) I received a reply which slightly dumbfounded me. The full reply is below, but it began: “We have reviewed your submission, and while this behavior is not perfectly ideal, it is intended.”

After asking some people in the infosec community what the right next step was, I was advised that I’m within my rights to disclose this publicly, mostly to give fair warning to Slack users that this exploit exists.

Unforeseen Consequences

So what was this exploit I found? In some ways it’s so deliciously simple that I had to double-check it had actually happened. I found it by using Slack “as intended”, but the result was not what I expected, the UI was unclear about the consequences, and I believe most users who trigger it would not realise what has happened. In the moment, under certain circumstances, it could cause real harm to users.

The list of actions is as follows:

- Click on a user in Direct Messages

- Click on the user name at the top where the chevron is located

- Click "Add people to this conversation" (up to 9 people)

- Add one or more users to the conversation

You will now see a popup with several options. In my case, because I couldn’t find the original DM with the pair of engineers in the UI, the option “Include conversation history?” seemed sensible.

In hindsight, there is a message at the top of the screen. But as many know, messages that aren’t labeled as warnings are easily missed or ignored in the moment by users, or by any bad actor with access to the appropriate Slack account.

So now a private DM conversation, going all the way back to its very first message, has been shared with all the people added to it, attachments and all.

The other person in the DM was never asked to consent to this action.

What makes it worse is that this whole DM is now a thread, and a thread can be turned into a private channel.

Once it is a private channel, the original person in the DM can be removed from the entire room, no longer having access to any of the messages. Also, as a channel, it can have a much larger number of people (like a whole organisation) added to it.
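To make the chain of steps easier to follow, here is a minimal toy model of it in Python. It is only a sketch of the behaviour described above, not Slack’s real data model or API: the `Conversation` class, the function names, and the user names are all made up for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Hypothetical stand-in for a DM, group DM, or private channel."""
    kind: str                      # "dm", "group_dm", or "private_channel"
    members: set[str]
    messages: list[str] = field(default_factory=list)

    def can_read(self, user: str) -> bool:
        # In this toy model, membership is the only access check.
        return user in self.members


def add_people(dm: Conversation, new_members: list[str],
               include_history: bool) -> Conversation:
    """Model of "Add people to this conversation"."""
    # With "Include conversation history?" chosen, every old message is carried over.
    history = list(dm.messages) if include_history else []
    return Conversation("group_dm", dm.members | set(new_members), history)


def convert_to_private_channel(group: Conversation) -> Conversation:
    """Model of turning the new conversation into a private channel."""
    return Conversation("private_channel", set(group.members), list(group.messages))


# Alice and Bob have a private DM; Alice (or someone using her account) acts alone.
dm = Conversation("dm", {"alice", "bob"}, ["confidential note", "private photo"])
group = add_people(dm, ["carol"], include_history=True)
channel = convert_to_private_channel(group)
channel.members.discard("bob")             # the original participant is removed
channel.members.update({"dave", "erin"})   # and a wider audience is added

print(sorted(channel.members))
print(channel.can_read("bob"), channel.can_read("erin"))
```

Note that nothing in this sequence requires any action from Bob, which is the heart of the problem.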

OK, what now?

So the functionality is working as intended.

But what if I’m a disgruntled employee who has some DMs with a board member who was sharing internal confidential information? Or a bully who happens to find the unlocked computer of a fellow employee they know is in a secret relationship? Or a hacker who has managed to get into a key account of a Slack workspace that hasn’t enabled 2FA, or via a magic link in an email account with a weak password?

It’s not like unauthorised access to systems is not a thing …

Slack’s reply and caveats

To be fair to Slack, I will include the response below where they include their reasons why this is “as intended”.

I’ll leave it up to you, the reader, to decide if it’s enough of a mitigation, or if many companies would even have the processes to begin to deal with this as an immediate threat. My guess is that many companies using Slack don’t have a dedicated CSO or a team managing Slack, and likely use the default settings.

Thank you for your report.

We have reviewed your submission, and while this behavior is not perfectly ideal, it is intended. In this “attack”, a user must have the permission to create private Channels, and this can be restricted by Owners/Admins.

In addition, the DM messages are not truly deleted when performing this behavior. An Admin/Owner with the necessary permissions to manage private Channels can always access the content within private Channels if necessary.

For these reasons, we are relatively satisfied with our security at this time, and we will be closing this report as Informative. Regardless, we appreciate your efforts here, and hope you continue to submit to our program.

Thanks, and good luck with your future bug hunting.